The Complete Guide to Web Scraping with Java

Raluca Penciuc on Jul 08 2021

As opposed to the "time is money" mentality of the 20th century, now it's all about data. Particularly in the last decade, web scrapers have become extremely popular. It’s not hard to understand why: the Internet is brimming with valuable information that can make or break companies.

As companies become aware of the benefits of data extraction, more and more people are learning how to build their own scrapers. Besides its potential to boost business, building a scraper is also a neat project for developers who want to improve their coding skills.

If you’re on team Java but your work has nothing to do with web scraping, you’ll discover a new niche where you can put your skills to good use. This article provides a step-by-step tutorial on creating a simple web scraper in Java that extracts data from a website and saves it locally in CSV format.

Understanding Web Scraping

What does web scraping refer to? Many sites do not expose their data through public APIs, so web scrapers extract it directly from the web pages themselves. It’s a lot like a person copying text manually, but it’s done in the blink of an eye.

When you consider that better business intelligence means better decisions, this process is more valuable than it seems at first glance. Websites are producing more and more content, so doing this entirely by hand is no longer practical.

You might be wondering, "What am I going to do with this data?" Well, let's look at a few use cases where web scraping can really come in handy:

- Lead generation: an ongoing business needs a steady supply of leads to find new clients.

- Price intelligence: a company's decision to price and market its products will be informed by competitors’ prices.

- Machine learning: to make AI-powered solutions work correctly, developers need to provide training data.

Detailed descriptions and additional use cases are available in this well-written article that talks about the value of web scraping.

Even once you understand how web scraping works and how it can make your business more effective, building a scraper is not that simple. Websites have many ways of identifying bots and stopping them from accessing their data.

Here are some examples:

- Completely Automated Public Turing tests to tell Computers and Humans Apart (CAPTCHAs): These puzzles are reasonably easy for people to solve but a significant pain for scrapers.

- IP blocking: if a website detects that many requests are coming from the same IP address, it can block that address or greatly slow down its requests.

- Honeypots: links that are hidden from human visitors but still present in the HTML, so bots follow them; once a bot falls for the trap, the website blocks its IP address.

- Geo-blocking: the website may restrict certain content by region or serve different information depending on where the request comes from (for example, plane ticket prices).
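On the scraper's side, one common way to reduce the risk of rate-based IP blocking is simply to slow down. The sketch below enforces a minimum delay between consecutive requests; `RequestThrottle` is a hypothetical helper (not part of HtmlUnit or the JDK), it does nothing against CAPTCHAs, honeypots, or geo-blocking, and a suitable interval depends entirely on the target site.

```java
import java.util.concurrent.TimeUnit;

public class RequestThrottle {
    private final long minIntervalNanos;
    private long lastRequestNanos;
    private boolean firstRequest = true;

    RequestThrottle(long minIntervalMillis) {
        this.minIntervalNanos = TimeUnit.MILLISECONDS.toNanos(minIntervalMillis);
    }

    // Blocks until at least minIntervalMillis have passed since the previous call.
    synchronized void acquire() {
        if (!firstRequest) {
            long deadline = lastRequestNanos + minIntervalNanos;
            long remaining;
            // Loop until the deadline has really passed, since sleep granularity is coarse
            while ((remaining = deadline - System.nanoTime()) > 0) {
                try {
                    TimeUnit.NANOSECONDS.sleep(remaining);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt(); // preserve the interrupt flag
                    return;
                }
            }
        }
        firstRequest = false;
        lastRequestNanos = System.nanoTime();
    }

    public static void main(String[] args) {
        RequestThrottle throttle = new RequestThrottle(500);
        for (int i = 0; i < 3; i++) {
            throttle.acquire(); // e.g. call webClient.getPage(...) right after this
            System.out.println("request " + i + " may start now");
        }
    }
}
```

Calling `acquire()` right before every page request keeps the request rate below one per interval, no matter how fast the parsing code runs in between.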

Dealing with all these hurdles is no small feat. In fact, while it’s not too hard to build an OK bot, it’s damn difficult to build an excellent web scraper. That is why web scraping APIs became one of the hottest topics of the last decade.

WebScrapingAPI collects the HTML content from any website and automatically takes care of the problems I mentioned earlier. Furthermore, we are using Amazon Web Services, which ensures speed and scalability. Sounds like something you might like? Start your free WebScrapingAPI trial, and you will be able to make 5000 API calls for the first 14 days.

Understanding the Web

To understand the Web, you need to understand the Hypertext Transfer Protocol (HTTP), which defines how a client and a server communicate. Every HTTP request carries several pieces of information that describe the client and how it handles data: the method, the HTTP version, and the headers.

Web scrapers mostly use the GET method, which retrieves data from the server. More advanced scrapers may also use methods such as POST and PUT, which send data to the server. For details, see this detailed list of HTTP methods.
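To make that structure concrete, here is a minimal sketch that assembles the text of an HTTP/1.1 GET request by hand: a request line with the method, path, and version, then one `Name: value` line per header, then an empty line. `RawHttpRequest` and `build` are hypothetical names for illustration; in practice a client library such as HtmlUnit writes this message for you.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class RawHttpRequest {
    // Builds the text of an HTTP/1.1 request: request line, headers, blank line.
    static String build(String method, String path, Map<String, String> headers) {
        StringBuilder sb = new StringBuilder();
        // Request line: method, target path, and protocol version
        sb.append(method).append(' ').append(path).append(" HTTP/1.1\r\n");
        for (Map.Entry<String, String> h : headers.entrySet()) {
            sb.append(h.getKey()).append(": ").append(h.getValue()).append("\r\n");
        }
        // An empty line ends the header section; a GET request has no body
        sb.append("\r\n");
        return sb.toString();
    }

    public static void main(String[] args) {
        Map<String, String> headers = new LinkedHashMap<>(); // keeps insertion order
        headers.put("Host", "foodnetwork.co.uk");
        headers.put("Accept", "text/html");
        System.out.print(build("GET", "/italian-family-dinners/", headers));
    }
}
```

HTTP/1.1 lines end with CRLF (`\r\n`), which is why the sketch does not use a plain `\n`.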

HTTP headers carry additional details about requests and responses. You can consult the complete list of them, but the ones most relevant to web scraping are:

- User-Agent: identifies the client application, operating system, vendor, and version; web scrapers often set it to a real browser's value so their requests look more realistic.

- Host: the domain name (and, optionally, the port) of the server being requested.

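- Referer: contains the address of the page the user came from (the header name really is spelled with a single "r", a historical misspelling in the HTTP specification); since the content displayed can differ depending on it, this header has to be considered as well.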

- Cookie: sends back data that the server previously stored on the client, such as session or authentication tokens.

- Accept: tells the server which content types the client can handle (e.g., text/plain, application/json).
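As a rough illustration of setting these headers yourself, here is a sketch using only the JDK's `HttpURLConnection` (not HtmlUnit, which manages headers for you). The class name `HeaderDemo`, the example User-Agent string, and the chosen Accept value are assumptions for illustration. Host is deliberately not set by hand: the JDK derives it from the URL and treats it as a restricted header.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.UncheckedIOException;
import java.net.HttpURLConnection;
import java.net.URL;
import java.nio.charset.StandardCharsets;

public class HeaderDemo {
    // Example browser-like value; swap in whatever User-Agent you want to present
    static final String USER_AGENT = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
        + "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.0.0 Safari/537.36";

    // Prepares (but does not send) a GET request with scraping-relevant headers.
    static HttpURLConnection prepare(String url, String referer) {
        try {
            HttpURLConnection conn = (HttpURLConnection) new URL(url).openConnection();
            conn.setRequestMethod("GET");
            conn.setRequestProperty("User-Agent", USER_AGENT);
            conn.setRequestProperty("Accept", "text/html,application/xhtml+xml");
            if (referer != null) {
                conn.setRequestProperty("Referer", referer); // single "r", as in the spec
            }
            return conn;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Sends the request and returns the response body, assuming UTF-8 text.
    static String fetch(HttpURLConnection conn) throws IOException {
        StringBuilder body = new StringBuilder();
        try (BufferedReader in = new BufferedReader(
                new InputStreamReader(conn.getInputStream(), StandardCharsets.UTF_8))) {
            String line;
            while ((line = in.readLine()) != null) {
                body.append(line).append('\n');
            }
        } finally {
            conn.disconnect();
        }
        return body.toString();
    }

    public static void main(String[] args) {
        HttpURLConnection conn = prepare("https://foodnetwork.co.uk/italian-family-dinners/", null);
        // Calling fetch(conn) would send the request; here we only show what was prepared
        System.out.println(conn.getRequestMethod() + " " + conn.getURL());
        System.out.println("User-Agent: " + conn.getRequestProperty("User-Agent"));
    }
}
```

Note that this plain JDK request only downloads the raw HTML; unlike HtmlUnit, it does not run any of the page's JavaScript.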

Understanding Java

Java is an open-source, object-oriented language and one of the most popular programming languages. More than two decades have passed since we first encountered Java, and the language has become increasingly accessible.

Java was designed to have as few implementation dependencies as possible, so the same code can run on any platform with a Java Virtual Machine. Many developers favor the language for this reason, but it has other advantages as well:

- It’s open-source;

- It provides a variety of APIs;

- It’s cross-platform, providing more versatility;

- It has detailed documentation and reliable community support.

Building Your Own Web Scraper

Now we can start talking about extracting data. First things first, we need a website that provides valuable information. For this tutorial, we chose to scrape this webpage that shares Italian recipes.

Step 1: Setting Up the Environment

To build our Java web scraper, we first need to make sure that we have all the prerequisites:

- Java 8: even though Java 11 is, at the time of writing, the most recent version with Long-Term Support (LTS), Java 8 remains the preferred production standard among developers.

- Gradle: a flexible open-source build automation tool with a wide range of features, including dependency management (requires Java 8 or higher);

- A Java IDE: in this guide, we will use IntelliJ IDEA, as it makes the integration with Gradle pretty straightforward.

- HtmlUnit: a GUI-less browser for Java programs that can simulate browser events such as clicking and submitting forms, and that supports JavaScript.

After installing everything, we should verify that we followed the official guides correctly. Open a terminal and run the following commands:

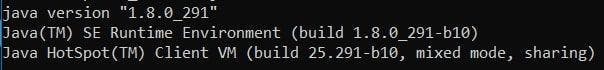

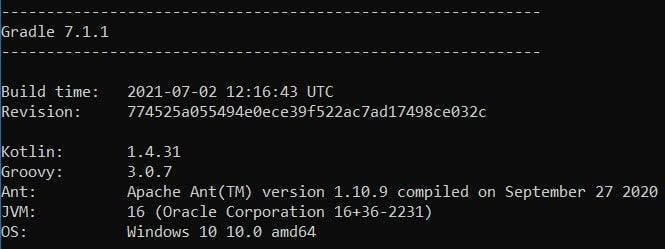

> java -version

> gradle -v

These should show you the versions of Java and Gradle that are installed on your machine:

If there is no error popping up, then we are good to go.

Now let’s create a project so we can start writing the code. Luckily for us, JetBrains offers a well-written tutorial on how to get started with IntelliJ and Gradle, so we don’t get lost in the configuration.

Once you create the project, make sure you let the IDE finish the first build, which automatically generates the project’s file tree.

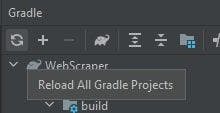

Once it’s done, open your “build.gradle” file and add the following line in the “dependencies” block:

implementation('net.sourceforge.htmlunit:htmlunit:2.51.0')

This adds HtmlUnit to our project as a dependency. Don’t forget to hit the “Reload” button in the Gradle tool window on the right, so you’ll get rid of all the “Not found” warnings.

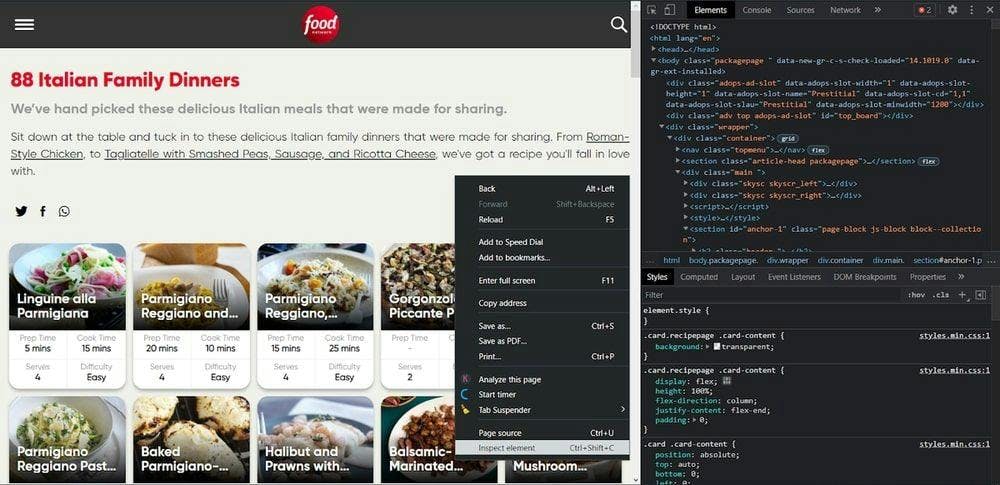

Step 2: Inspect the Page You Want to Scrape

Cool, let’s move on! Navigate to the page you want to scrape and right-click anywhere on it, then hit “Inspect element”. The developer console will pop up, where you should see the HTML of the website.

Step 3: Send an HTTP Request and Scrape the HTML

Now, to get that HTML on our local machine, we have to send an HTTP request using HtmlUnit, which returns the document. Let’s get back to the IDE and put this idea into code.

First, write the imports that we need to use HtmlUnit:

import com.gargoylesoftware.htmlunit.*;
import com.gargoylesoftware.htmlunit.html.*;
import java.io.IOException;
import java.util.List;

Then we initialize a WebClient and send an HTTP request to the website, which returns an HtmlPage. It’s important to close the client once you are done with the response; otherwise, the process will keep running.

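WebClient webClient = new WebClient(BrowserVersion.CHROME);
try {
    // Download the page; HtmlUnit also runs the page's JavaScript
    HtmlPage page = webClient.getPage("https://foodnetwork.co.uk/italian-family-dinners/");
    // The extraction code from Steps 4 and 5 goes here
} catch (IOException e) {
    System.out.println("An error occurred: " + e);
} finally {
    // Stop background JavaScript jobs and release the client,
    // even if the request failed; otherwise the process may keep running
    webClient.getCurrentWindow().getJobManager().removeAllJobs();
    webClient.close();
}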

It is worth mentioning that HtmlUnit will print a bunch of error messages in the console that will make you think your PC is about to explode. Well, have no fear: you can safely ignore most of them.

They are mainly caused by HtmlUnit trying to execute the website’s JavaScript code. However, some of them can be actual errors that point to a problem in your code, so it’s better to pay attention to them when you run your program.

You can silence some of these errors by configuring a few options on your WebClient (set them right after creating it, before calling getPage):

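// Disable CSS processing; we only need the HTML
webClient.getOptions().setCssEnabled(false);
// Return the page instead of throwing an exception on 4xx/5xx status codes
webClient.getOptions().setThrowExceptionOnFailingStatusCode(false);
// Keep going when the site's JavaScript fails
webClient.getOptions().setThrowExceptionOnScriptError(false);
// Don't print the body of failing responses to the console
webClient.getOptions().setPrintContentOnFailingStatusCode(false);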
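If the console is still too noisy, you can also lower HtmlUnit's log level. This is a minimal sketch under two assumptions: that HtmlUnit 2.x logs through Apache Commons Logging, and that Commons Logging falls back to `java.util.logging` because no other backend (such as Log4j) is on the classpath. The logger name `com.gargoylesoftware` is HtmlUnit's package prefix, and `QuietHtmlUnit` is a hypothetical class name. Keep in mind that this also hides the occasional warning that points to a real problem in your code.

```java
import java.util.logging.Level;
import java.util.logging.Logger;

public class QuietHtmlUnit {
    // Strong reference on purpose: java.util.logging holds loggers weakly, so an
    // unreferenced Logger can be garbage-collected and lose the level set on it.
    private static final Logger HTMLUNIT_LOG = Logger.getLogger("com.gargoylesoftware");

    // Turns off every log record from loggers under the com.gargoylesoftware prefix
    static void silence() {
        HTMLUNIT_LOG.setLevel(Level.OFF);
    }

    public static void main(String[] args) {
        silence(); // call this before creating the WebClient
        System.out.println("HtmlUnit logging level: " + HTMLUNIT_LOG.getLevel());
    }
}
```

Child loggers such as `com.gargoylesoftware.htmlunit.javascript...` inherit the OFF level unless they have their own level set.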

Step 4: Extract Specific Sections

So we have an HTML document, but we want data, which means we have to convert the previous response into human-readable information.

Starting with baby steps, let’s extract the title of the page. We can do this with the help of the built-in getTitleText method:

String title = page.getTitleText();
System.out.println("Page Title: " + title);

Moving forward, let’s extract all the links from the page. For this, we have the built-in getAnchors method, which returns all the <a> elements in the HTML, and getHrefAttribute, which retrieves the value of each link’s href attribute:

List<HtmlAnchor> links = page.getAnchors();
for (HtmlAnchor link : links) {
    String href = link.getHrefAttribute();
    System.out.println("Link: " + href);
}

As you can see, HtmlUnit provides many built-in and self-explanatory methods that spare you hours of reading documentation.

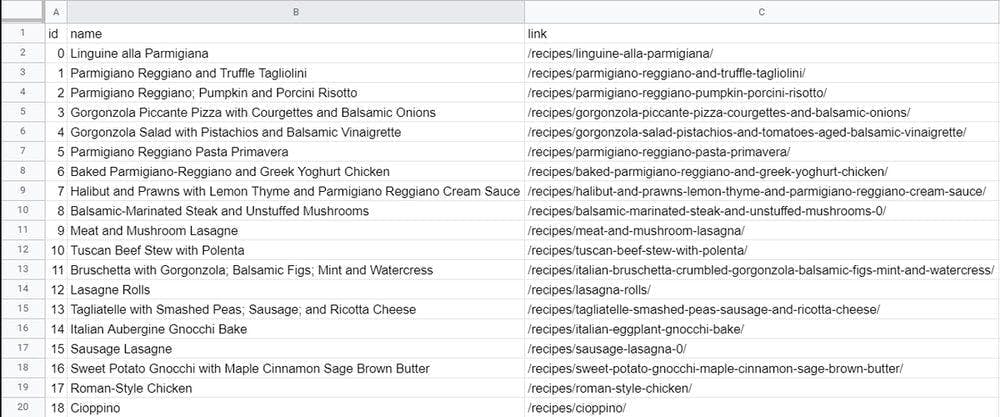
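The values printed above are the raw href attributes, so some may be relative ("/recipes/...") or not web links at all ("mailto:", "javascript:"). Here is a sketch of cleaning them up with the JDK's `java.net.URI`: resolve each href against the page URL, keep only http(s) links, and drop duplicates. `LinkNormalizer` and the sample hrefs are hypothetical.

```java
import java.net.URI;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

public class LinkNormalizer {
    // Turns raw href values into absolute http(s) URLs, dropping duplicates
    // and non-web links (mailto:, javascript:, ...) while keeping page order.
    static List<String> normalize(String pageUrl, List<String> hrefs) {
        URI base = URI.create(pageUrl);
        Set<String> unique = new LinkedHashSet<>();
        for (String href : hrefs) {
            if (href == null || href.trim().isEmpty()) {
                continue; // empty href: nothing to follow
            }
            URI resolved;
            try {
                resolved = base.resolve(href.trim());
            } catch (IllegalArgumentException e) {
                continue; // not a syntactically valid URI, skip it
            }
            String scheme = resolved.getScheme();
            if ("http".equalsIgnoreCase(scheme) || "https".equalsIgnoreCase(scheme)) {
                unique.add(resolved.toString());
            }
        }
        return new ArrayList<>(unique);
    }

    public static void main(String[] args) {
        List<String> raw = Arrays.asList(
            "/recipes/lasagne", "risotto", "mailto:chef@example.com", "/recipes/lasagne");
        System.out.println(normalize("https://foodnetwork.co.uk/italian-family-dinners/", raw));
    }
}
```

In the scraper, you could collect `link.getHrefAttribute()` values into a list inside the loop and pass that list, together with the page URL, to `normalize`.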

Let’s take a more realistic example. More precisely, we need to extract all the recipes from the website: their titles and their addresses.

If you inspect one of the recipe cards, you can see that all the information we need is stored in the link’s attributes, which means that all we need to do is look for the links with the class “card-link” and read their attributes.

List<?> anchors = page.getByXPath("//a[@class='card-link']");
for (int i = 0; i < anchors.size(); i++) {
    HtmlAnchor link = (HtmlAnchor) anchors.get(i);
    // Replace commas so the title doesn't split into extra CSV columns later
    String recipeTitle = link.getAttribute("title").replace(',', ';');
    String recipeLink = link.getHrefAttribute();
}

This time, we use an XPath expression to find the links with the “card-link” class at any depth in the HTML document. Then we iterate over the result list and extract the title and href attributes of each link.
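One caveat: the predicate `@class='card-link'` only matches links whose class attribute is exactly "card-link", so a card with `class="card-link featured"` would be skipped. A common XPath 1.0 idiom matches the class as a whitespace-separated token instead. The sketch below compares both expressions with the JDK's own `javax.xml.xpath` on a small, well-formed, hypothetical snippet (real HTML usually isn't valid XML, so this is only a way to experiment with expressions); since HtmlUnit also evaluates XPath 1.0, the same expression string should work with `page.getByXPath`.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.ParserConfigurationException;
import javax.xml.xpath.XPathConstants;
import javax.xml.xpath.XPathExpressionException;
import javax.xml.xpath.XPathFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;
import org.xml.sax.SAXException;

public class CardLinkXPath {
    // Exact match: selects <a> only when class is exactly "card-link"
    static final String EXACT = "//a[@class='card-link']";
    // Token match: also selects class="card-link featured" and similar
    static final String TOKEN =
        "//a[contains(concat(' ', normalize-space(@class), ' '), ' card-link ')]";

    // Hypothetical, well-formed markup standing in for the recipe cards
    static final String SAMPLE = "<div>"
        + "<a class='card-link' title='Lasagne' href='/recipes/lasagne'/>"
        + "<a class='card-link featured' title='Risotto' href='/recipes/risotto'/>"
        + "<a class='nav-link' title='Home' href='/'/>"
        + "</div>";

    // Returns the title attribute of every element the expression selects
    static List<String> titles(String xml, String expression) {
        try {
            Document doc = DocumentBuilderFactory.newInstance()
                .newDocumentBuilder()
                .parse(new InputSource(new StringReader(xml)));
            NodeList nodes = (NodeList) XPathFactory.newInstance().newXPath()
                .evaluate(expression, doc, XPathConstants.NODESET);
            List<String> result = new ArrayList<>();
            for (int i = 0; i < nodes.getLength(); i++) {
                result.add(((Element) nodes.item(i)).getAttribute("title"));
            }
            return result;
        } catch (ParserConfigurationException | SAXException | IOException
                 | XPathExpressionException e) {
            throw new IllegalStateException("Could not evaluate " + expression, e);
        }
    }

    public static void main(String[] args) {
        System.out.println("exact: " + titles(SAMPLE, EXACT));
        System.out.println("token: " + titles(SAMPLE, TOKEN));
    }
}
```

Whether you need the token form depends on the site's markup; if every card uses exactly one class, the simpler expression is enough.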

Step 5: Export the Data to CSV

This type of extraction is especially useful when the data has to be passed to another application, a recipe aggregator in our case. To do that, we need to export the parsed data to an external file.

We will create a CSV file, since it can easily be read by another application and opened in Excel for further processing. First, just one more import:

import java.io.FileWriter;

Then, before the loop, we initialize a FileWriter that creates the CSV file. Passing false as the second argument overwrites any previous file; in “append” mode (true), every run would add another header row below the old data:

FileWriter recipesFile = new FileWriter("recipes.csv", false);
recipesFile.write("id,name,link\n");

Right after creating the file, we write its first line, which is the table’s header. Now we get back to the previous loop, where we parsed all the recipe cards, and complete it with the following line:

recipesFile.write(i + "," + recipeTitle + "," + recipeLink + "\n");

We’re done writing to the file, so now it’s time to close it:

recipesFile.close();

Cool, that’s it! Now we can see all the parsed data in a clean and easy-to-read format.
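Replacing commas with semicolons keeps the columns aligned, but it alters the recipe titles. The usual alternative is to quote fields as described in RFC 4180, which Excel and most CSV readers understand. Here is a minimal sketch; `CsvRow`, `escape`, and `row` are hypothetical helpers, not part of the JDK, and the sample title is made up.

```java
import java.util.ArrayList;
import java.util.List;

public class CsvRow {
    // Quotes a field (RFC 4180 style) when it contains a comma, a double quote,
    // or a line break; embedded double quotes are doubled.
    static String escape(String field) {
        if (field == null) {
            return "";
        }
        boolean needsQuotes = field.indexOf(',') >= 0 || field.indexOf('"') >= 0
            || field.indexOf('\n') >= 0 || field.indexOf('\r') >= 0;
        return needsQuotes ? "\"" + field.replace("\"", "\"\"") + "\"" : field;
    }

    // Builds one CSV line, including the trailing newline, from raw values
    static String row(String... fields) {
        List<String> escaped = new ArrayList<>();
        for (String f : fields) {
            escaped.add(escape(f));
        }
        return String.join(",", escaped) + "\n";
    }

    public static void main(String[] args) {
        // Hypothetical recipe title that contains a comma
        System.out.print(row("0", "Pasta, Peas and Pancetta", "https://example.com/recipe"));
    }
}
```

With this helper, the loop could keep the original title (`link.getAttribute("title")` without the `replace`) and write `recipesFile.write(CsvRow.row(String.valueOf(i), recipeTitle, recipeLink));`.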

Conclusion and Alternatives

That concludes our tutorial. I hope that this article was informative and gave you a better understanding of web scraping.

As you can imagine, this technology can do a lot more than fuel recipe aggregators. It’s up to you to find the correct data and analyze it to create new opportunities.

But, as I said at the start of the article, there are many challenges web scrapers need to face. Developers might find it exciting to solve these issues with their own web scraper as it’s a great learning experience and a lot of fun. Still, if you have a project to finish, you may want to avoid the costs associated with that (time, money, people).

It will always be easier to solve these problems with a dedicated API. Whatever the blocking point, be it JavaScript rendering, proxies, or CAPTCHAs, WebScrapingAPI overcomes it and provides a customizable experience. There is also a free trial option, so if you aren't quite sure yet, why not give it a shot?
